Logging#

The following logging guidelines are best practices discovered through implementing logging services and modules within PyAnsys libraries. Suggestions and improvements are welcomed.

For logging techniques, see Logging HOWTO in the Python documentation. Its Basic Logging Tutorial and Advanced Logging Tutorial sections are particularly helpful.

Logging overview#

Logging helps to track events occurring in the app. A log record is created for each event. This record contains detailed information about the current app operation. Whenever information must be exposed, displayed, and shared, logging is the way to do it.

Logging is beneficial to both users and app developers. It serves several purposes, including these:

Extracts some valuable data for users to know the status of their work

Tracks the progress and course of app usage

Provides the developer with as much information as possible if an issue happens

The message logged can contain generic information or embed data specific to the current session. Message content is associated with a severity level, such as INFO, WARNING, ERROR, and DEBUG. Generally, the severity level indicates the recipient of the message. For example, an INFO message is directed to the user, while a DEBUG message is directed to the developer.

Logging best practices#

The logging capabilities in PyAnsys libraries should be built upon Python’s standard logging library. A PyAnsys library should not replace the standard logging library but rather provide a way for both it and the PyAnsys library to interact. Subsequent sections provide best logging practices.

Avoid printing to the console#

A common habit while prototyping a new feature is to print messages to the console. Instead of using the built-in print() function, you should use a stream handler and redirect its content. This allows messages to be filtered based on their severity level and formatted properly. To accomplish this, add a Boolean argument to the initializer of your custom logger class (the class that wraps logging.Logger) that specifies how to handle the stream.
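The idea above can be sketched as follows. This is a minimal illustration, not the PyAnsys implementation: the `SketchLogger` class, the `sketch_app` logger name, and the `stream` argument are assumptions introduced here so that the output can be captured and inspected.

```python
import io
import logging


class SketchLogger:
    """Hypothetical wrapper that optionally sends records to a stream handler."""

    def __init__(self, name="sketch_app", level=logging.INFO, to_stdout=False, stream=None):
        self.logger = logging.getLogger(name)
        self.logger.setLevel(level)
        self.std_out_handler = None
        if to_stdout:
            # StreamHandler writes to sys.stderr when ``stream`` is None.
            self.std_out_handler = logging.StreamHandler(stream)
            self.std_out_handler.setLevel(level)
            self.std_out_handler.setFormatter(
                logging.Formatter("%(levelname)s - %(message)s")
            )
            self.logger.addHandler(self.std_out_handler)


buffer = io.StringIO()  # stands in for the console so the output can be checked
log = SketchLogger(to_stdout=True, stream=buffer)
log.logger.debug("Hidden because DEBUG is below the INFO threshold.")
log.logger.info("Mesh loaded.")
captured = buffer.getvalue()
```

Unlike a bare print() call, the DEBUG message is filtered out by its severity level and the INFO message is rendered through the formatter.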

Turn on and off handlers#

You might sometimes want to turn off a specific handler, such as a file handler where log messages are written. If so, you must properly close and remove the existing handler. Otherwise, you might be denied file access later when you try to write new log content.

This code snippet shows how to turn off a log handler:

# Iterate over a copy because removeHandler() mutates the handlers list.
for handler in list(design_logger.handlers):
    if isinstance(handler, logging.FileHandler):
        handler.close()
        design_logger.removeHandler(handler)

Use app filters#

An app filter is most valuable when the content of a message depends on certain conditions. It injects contextual information into the body of the message. This can be used to harmonize message rendering when the app output varies based on the data processed.

Using an app filter requires creating a class based on the logging.Filter class from the logging module and implementing its filter() method. This method adds all the modified content to the record that is sent to the stream:

class AppFilter(logging.Filter):
    def __init__(self, destination=None, extra=None):
        super().__init__()
        # Always set both attributes, falling back to the defaults.
        self._destination = destination or "Global"
        self._extra = extra or ""

    def filter(self, record):
        """Modify the record sent to the stream.

        Parameters
        ----------
        record : logging.LogRecord
            Record to which the destination and extra fields are added.
        """
        record.destination = self._destination

        # This avoids the extra ':' for Global, which does not have any extra info.
        if not self._extra:
            record.extra = self._extra
        else:
            record.extra = self._extra + ":"
        return True


class CustomLogger(object):
    def __init__(self, messenger, level=logging.DEBUG, to_stdout=False):
        # FORMATTER (a logging.Formatter) and self.global_logger are assumed
        # to be defined elsewhere in the module and in this class.
        if to_stdout:
            self._std_out_handler = logging.StreamHandler()
            self._std_out_handler.setLevel(level)
            self._std_out_handler.setFormatter(FORMATTER)
            self.global_logger.addHandler(self._std_out_handler)
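To make the effect of such a filter visible, here is a small self-contained sketch. The `DestinationFilter` class, the logger name, the format string, and the `MyDesign` value are hypothetical and chosen only for illustration. The sketch shows how fields injected by the filter become available to the formatter.

```python
import io
import logging


class DestinationFilter(logging.Filter):
    """Hypothetical filter that adds ``destination`` and ``extra`` to every record."""

    def __init__(self, destination="Global", extra=""):
        super().__init__()
        self._destination = destination
        self._extra = extra

    def filter(self, record):
        record.destination = self._destination
        # Append the separator only when there is extra text to show.
        record.extra = self._extra + ":" if self._extra else ""
        return True


stream = io.StringIO()
handler = logging.StreamHandler(stream)
# The format string can reference the fields that the filter injects.
handler.setFormatter(
    logging.Formatter("%(destination)s:%(extra)s%(levelname)s:%(message)s")
)

design_log = logging.getLogger("sketch.filter.design")
design_log.setLevel(logging.INFO)
design_log.propagate = False
design_log.addHandler(handler)
design_log.addFilter(DestinationFilter("Design", "MyDesign"))

design_log.info("Solve started.")
rendered = stream.getvalue()
```

Because the filter is attached to the logger, every record logged through it carries the same contextual fields, so the calling code does not need to pass them each time.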

Use %-formatting for strings#

Although f-strings are often recommended for formatting most strings, the older %-formatting is preferable for logging. When %-formatting is used, the string is not formatted when the logging call is made. Instead, it is formatted only when the message is emitted. If any formatting errors occur, they are reported as logging errors and do not halt the program.

logger.info("Project %s has been opened.", project.GetName())
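The deferred formatting can be observed with a short experiment. In this sketch, the `Expensive` class and the logger name are made up for the demonstration. The class counts how often it is converted to a string, which shows that a filtered-out message is never formatted with %-style arguments, while an f-string is always built before the call.

```python
import logging


class Expensive:
    """Counts how many times it is converted to a string."""

    def __init__(self):
        self.str_calls = 0

    def __str__(self):
        self.str_calls += 1
        return "expensive-value"


lazy_log = logging.getLogger("sketch.lazy")
lazy_log.setLevel(logging.WARNING)
lazy_log.propagate = False
lazy_log.addHandler(logging.NullHandler())

value = Expensive()

# DEBUG is below the WARNING threshold, so the message is never formatted.
lazy_log.debug("Value is %s", value)
calls_with_percent = value.str_calls

# The f-string is built before the call, even though the record is dropped.
lazy_log.debug(f"Value is {value}")
calls_with_fstring = value.str_calls
```

Note that the arguments themselves are still evaluated at the call site. Only the string interpolation is deferred.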

App and service logging modules#

PyAnsys libraries use app and service logging modules to extend or expose features from an Ansys app, product, or service, which can be local or remote.

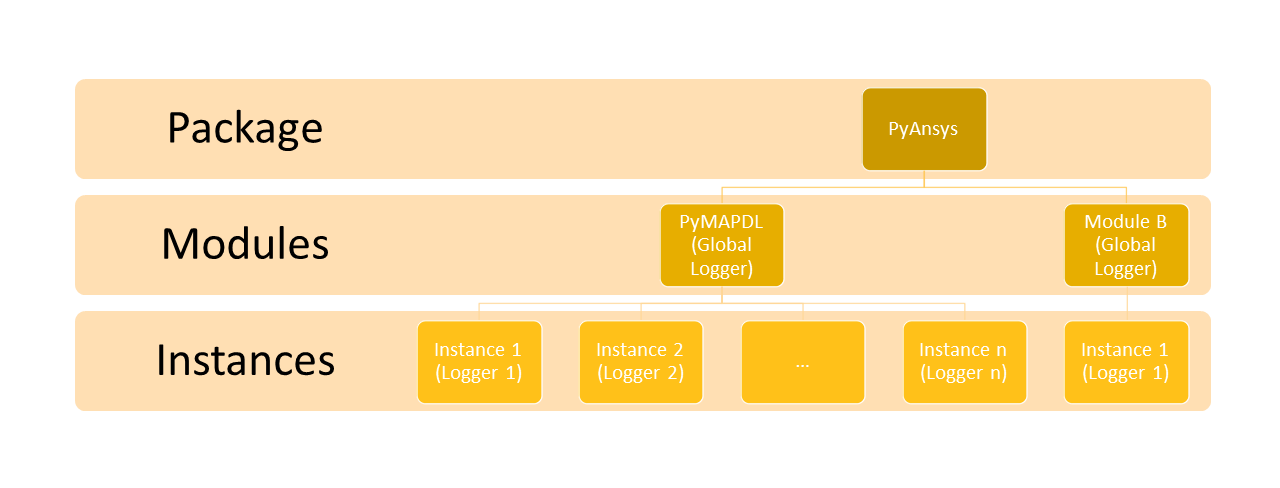

For a PyAnsys library, there are two main loggers that expose or extend a service-based app: the global logger and the instance logger.

These loggers are customized classes that wrap the logging.Logger class from the logging module and add specific features to it. The preceding image shows the logging approach used by PyMAPDL and the scopes of the global and instance loggers.

You can see the source for a custom PyAnsys logger in the first of the following collapsible sections and in the pyansys_logging.py file in the pyansys-dev-guide repository. The second collapsible section contains unit tests that demonstrate how to use this custom PyAnsys logger:

Example of a custom PyAnsys logger

"""Module for PyAnsys logging."""

from copy import copy
from datetime import datetime
import logging
from logging import CRITICAL, DEBUG, ERROR, INFO, WARN
import sys

# Default configuration
LOG_LEVEL = logging.DEBUG
FILE_NAME = "PyProject.log"

# Formatting
STDOUT_MSG_FORMAT = "%(levelname)s - %(instance_name)s - %(module)s - %(funcName)s - %(message)s"
FILE_MSG_FORMAT = STDOUT_MSG_FORMAT

DEFAULT_STDOUT_HEADER = """
LEVEL - INSTANCE NAME - MODULE - FUNCTION - MESSAGE
"""
DEFAULT_FILE_HEADER = DEFAULT_STDOUT_HEADER

NEW_SESSION_HEADER = f"""
===============================================================================
NEW SESSION - {datetime.now().strftime("%m/%d/%Y, %H:%M:%S")}
==============================================================================="""

string_to_loglevel = {
    "DEBUG": DEBUG,
    "INFO": INFO,
    "WARN": WARN,
    "WARNING": WARN,
    "ERROR": ERROR,
    "CRITICAL": CRITICAL,
}

class InstanceCustomAdapter(logging.LoggerAdapter):
    """Keeps the reference to a product instance name dynamic.

    If you use the standard approach, which is supplying ``extra`` input
    to the logger, you would need to keep inputting product instances
    every time a log is created.

    Using adapters, you just need to specify the product instance that you refer
    to once.
    """

    # level is kept for compatibility with ``suppress_logging``,
    # but it does nothing.
    level = None
    file_handler = None
    std_out_handler = None

    def __init__(self, logger, extra=None):
        self.logger = logger
        self.extra = extra
        self.file_handler = logger.file_handler
        self.std_out_handler = logger.std_out_handler

    def process(self, msg, kwargs):
        """Get instance_name for logging."""
        kwargs["extra"] = {}
        # These are the extra parameters sent to the log.
        # Here, self.extra is the argument passed to the log records.
        kwargs["extra"]["instance_name"] = self.extra.get_name()
        return msg, kwargs

    def log_to_file(self, filename=FILE_NAME, level=LOG_LEVEL):
        """Add file handler to logger.

        Parameters
        ----------
        filename : str, optional
            Name of the file where the logs are recorded. By default
            ``PyProject.log``
        level : str, optional
            Level of logging, for example ``'DEBUG'``. By default
            ``logging.DEBUG``.
        """
        self.logger = add_file_handler(
            self.logger, filename=filename, level=level, write_headers=True
        )
        self.file_handler = self.logger.file_handler

    def log_to_stdout(self, level=LOG_LEVEL):
        """Add standard output handler to the logger.

        Parameters
        ----------
        level : str, optional
            Level of logging record. By default ``logging.DEBUG``.
        """
        if self.std_out_handler:
            raise Exception("Stdout logger already defined.")

        self.logger = add_stdout_handler(self.logger, level=level)
        self.std_out_handler = self.logger.std_out_handler

    def setLevel(self, level="DEBUG"):
        """Change the log level of the object and the attached handlers."""
        self.logger.setLevel(level)
        for each_handler in self.logger.handlers:
            each_handler.setLevel(level)
        self.level = level

class PyAnsysPercentStyle(logging.PercentStyle):
    """Log message formatting."""

    def __init__(self, fmt, *, defaults=None):
        self._fmt = fmt or self.default_format
        self._defaults = defaults

    def _format(self, record):
        defaults = self._defaults
        if defaults:
            values = defaults | record.__dict__
        else:
            values = record.__dict__

        # Here you can make any changes that you want in the record. For
        # example, adding a key.

        # You could create an ``if`` here if you want conditional formatting, and even
        # change the record.__dict__.
        # If you don't want to create conditional fields, it is fine to keep
        # the same MSG_FORMAT for all of them.

        # For the case of logging exceptions to the logger.
        values.setdefault("instance_name", "")

        return STDOUT_MSG_FORMAT % values

class PyProjectFormatter(logging.Formatter):
    """Customized ``Formatter`` class used to overwrite the default format styles."""

    def __init__(
        self,
        fmt=STDOUT_MSG_FORMAT,
        datefmt=None,
        style="%",
        validate=True,
        defaults=None,
    ):
        if sys.version_info < (3, 8):
            super().__init__(fmt, datefmt, style)
        else:
            # 3.8: The validate parameter was added
            super().__init__(fmt, datefmt, style, validate)
        self._style = PyAnsysPercentStyle(fmt, defaults=defaults)  # overwriting

class InstanceFilter(logging.Filter):
    """Ensures that instance_name record always exists."""

    def filter(self, record):
        """If record had no attribute instance_name, create it and populate with empty string."""
        if not hasattr(record, "instance_name"):
            record.instance_name = ""
        return True

class Logger:
    """Logger used for each PyProject session.

    This class lets you add a handler to a file or standard output.

    Parameters
    ----------
    level : int, optional
        Logging level to filter the message severity allowed in the logger.
        The default is ``logging.DEBUG``.
    to_file : bool, optional
        Write log messages to a file. The default is ``False``.
    to_stdout : bool, optional
        Write log messages into the standard output. The
        default is ``True``.
    filename : str, optional
        Name of the file where log messages are written to.
        The default is ``"PyProject.log"``.
    cleanup : bool, optional
        Close and remove the handlers when the logger is deleted.
        The default is ``True``.
    """

    file_handler = None
    std_out_handler = None
    _level = logging.DEBUG
    _instances = {}

    def __init__(
        self,
        level=logging.DEBUG,
        to_file=False,
        to_stdout=True,
        filename=FILE_NAME,
        cleanup=True,
    ):
        """Initialize Logger class."""
        self.logger = logging.getLogger("pyproject_global")  # Creating default main logger.
        self.logger.addFilter(InstanceFilter())
        self.logger.setLevel(level)
        self.logger.propagate = True
        self.level = self.logger.level  # noqa: TD002, TD003 # TODO: TO REMOVE

        # Writing logging methods.
        self.debug = self.logger.debug
        self.info = self.logger.info
        self.warning = self.logger.warning
        self.error = self.logger.error
        self.critical = self.logger.critical
        self.log = self.logger.log

        if to_file or filename != FILE_NAME:
            # We record to file.
            self.log_to_file(filename=filename, level=level)

        if to_stdout:
            self.log_to_stdout(level=level)

        self.add_handling_uncaught_expections(
            self.logger
        )  # Using logger to record unhandled exceptions.

        self.cleanup = cleanup

    def log_to_file(self, filename=FILE_NAME, level=LOG_LEVEL):
        """Add file handler to logger.

        Parameters
        ----------
        filename : str, optional
            Name of the file where the logs are recorded. By default FILE_NAME
        level : str, optional
            Level of logging, for example ``'DEBUG'``. By default LOG_LEVEL
        """
        # add_file_handler() attaches the handler to this object in place.
        add_file_handler(self, filename=filename, level=level, write_headers=True)

    def log_to_stdout(self, level=LOG_LEVEL):
        """Add standard output handler to the logger.

        Parameters
        ----------
        level : str, optional
            Level of logging record. By default LOG_LEVEL
        """
        # add_stdout_handler() attaches the handler to this object in place.
        add_stdout_handler(self, level=level)

    def setLevel(self, level="DEBUG"):
        """Change the log level of the object and the attached handlers."""
        self.logger.setLevel(level)
        for each_handler in self.logger.handlers:
            each_handler.setLevel(level)
        self._level = level

    def _make_child_logger(self, suffix, level):
        """Create a child logger.

        Create a child logger either using ``getChild`` or copying
        attributes between ``pyproject_global`` logger and the new
        one.
        """
        logger = logging.getLogger(suffix)
        logger.std_out_handler = None
        logger.file_handler = None

        # Accept the level either as a name (for example ``"DEBUG"``) or as an int.
        if isinstance(level, str):
            level = string_to_loglevel[level.upper()]

        if self.logger.hasHandlers():
            for each_handler in self.logger.handlers:
                new_handler = copy(each_handler)

                if each_handler == self.file_handler:
                    logger.file_handler = new_handler
                elif each_handler == self.std_out_handler:
                    logger.std_out_handler = new_handler

                if level:
                    # The handlers are copied, and their log level is changed
                    # if the specified log level is lower than the one of
                    # the global logger.
                    if each_handler.level > level:
                        new_handler.setLevel(level)

                logger.addHandler(new_handler)

        if level:
            logger.setLevel(level)
        else:
            logger.setLevel(self.logger.level)

        logger.propagate = True
        return logger

    def add_child_logger(self, suffix, level=None):
        """Add a child logger to the main logger.

        This logger is more general than an instance logger, which is designed
        to track the state of the application instances.

        If the logging level is in the arguments, a new logger with a
        reference to the ``_global`` logger handlers is created
        instead of a child.

        Parameters
        ----------
        suffix : str
            Name of the logger.
        level : str, optional
            Level of logging.

        Returns
        -------
        logging.Logger
            Logger class.
        """
        name = self.logger.name + "." + suffix
        self._instances[name] = self._make_child_logger(name, level)
        return self._instances[name]

    def _add_product_instance_logger(self, name, product_instance, level):
        if isinstance(name, str):
            instance_logger = InstanceCustomAdapter(
                self._make_child_logger(name, level), product_instance
            )
        elif name is None:
            instance_logger = InstanceCustomAdapter(
                self._make_child_logger("NO_NAMED_YET", level), product_instance
            )
        else:
            raise TypeError(f"``name`` parameter must be a string or None, not {type(name)}")

        return instance_logger

    def add_instance_logger(self, name, product_instance, level=None):
        """Create a logger for an application instance.

        This instance logger is a logger with an adapter that adds
        contextual information, such as the <product/service> instance
        name. This logger is returned, and you can use it to log events
        as a normal logger. It is also stored in the ``_instances``
        attribute.

        Parameters
        ----------
        name : str
            Name for the new logger.
        product_instance : ansys.product.service.module.ProductClass
            Class instance. This must implement the ``get_name()`` method.
        level : str, optional
            Level of logging.

        Returns
        -------
        InstanceCustomAdapter
            Logger adapter customized to add additional information to
            the logs. You can use this class to log events in the
            same way you would with a logger class.

        Raises
        ------
        TypeError
            You can only input strings as ``name`` to this method.
        """
        count_ = 0
        new_name = name
        # ``loggerDict`` maps the names of all existing loggers.
        while new_name in logging.root.manager.loggerDict:
            count_ += 1
            new_name = name + "_" + str(count_)

        self._instances[new_name] = self._add_product_instance_logger(
            new_name, product_instance, level
        )
        return self._instances[new_name]

    def __getitem__(self, key):
        """Define custom KeyError message."""
        if key in self._instances.keys():
            return self._instances[key]
        else:
            raise KeyError(f"There are no instances with name {key}")

    def add_handling_uncaught_expections(self, logger):
        """Redirect the output of an exception to the logger."""

        def handle_exception(exc_type, exc_value, exc_traceback):
            if issubclass(exc_type, KeyboardInterrupt):
                sys.__excepthook__(exc_type, exc_value, exc_traceback)
                return
            logger.critical("Uncaught exception", exc_info=(exc_type, exc_value, exc_traceback))

        sys.excepthook = handle_exception

    def __del__(self):
        """Close the logger and all its handlers."""
        self.logger.debug("Collecting logger")
        if self.cleanup:
            try:
                # Iterate over a copy because removeHandler() mutates the list.
                for handler in list(self.logger.handlers):
                    handler.close()
                    self.logger.removeHandler(handler)
            except Exception:
                try:
                    if self.logger is not None:
                        self.logger.error("The logger was not deleted properly.")
                except Exception:
                    pass
        else:
            self.logger.debug("Collecting but not exiting due to 'cleanup = False'")

def add_file_handler(logger, filename=FILE_NAME, level=LOG_LEVEL, write_headers=False):
    """Add a file handler to the input.

    Parameters
    ----------
    logger : Logger or logging.Logger
        Logger where to add the file handler.
    filename : str, optional
        Name of the output file. By default FILE_NAME
    level : str, optional
        Level of log recording. By default LOG_LEVEL
    write_headers : bool, optional
        Record the headers to the file. By default ``False``.

    Returns
    -------
    logger
        Return the logger or Logger object.
    """
    file_handler = logging.FileHandler(filename)
    file_handler.setLevel(level)
    file_handler.setFormatter(logging.Formatter(FILE_MSG_FORMAT))

    if isinstance(logger, Logger):
        logger.file_handler = file_handler
        logger.logger.addHandler(file_handler)
    elif isinstance(logger, logging.Logger):
        logger.file_handler = file_handler
        logger.addHandler(file_handler)

    if write_headers:
        file_handler.stream.write(NEW_SESSION_HEADER)
        file_handler.stream.write(DEFAULT_FILE_HEADER)

    return logger

def add_stdout_handler(logger, level=LOG_LEVEL, write_headers=False):
    """Add a stream handler to the logger.

    Parameters
    ----------
    logger : Logger or logging.Logger
        Logger where to add the stream handler.
    level : str, optional
        Level of log recording. By default ``logging.DEBUG``.
    write_headers : bool, optional
        Record the headers to the stream. By default ``False``.

    Returns
    -------
    logger
        The logger or Logger object.
    """
    std_out_handler = logging.StreamHandler(sys.stdout)
    std_out_handler.setLevel(level)
    std_out_handler.setFormatter(PyProjectFormatter(STDOUT_MSG_FORMAT))

    if isinstance(logger, Logger):
        logger.std_out_handler = std_out_handler
        logger.logger.addHandler(std_out_handler)
    elif isinstance(logger, logging.Logger):
        # Keep a reference on the logger, as add_file_handler() does.
        logger.std_out_handler = std_out_handler
        logger.addHandler(std_out_handler)

    if write_headers:
        std_out_handler.stream.write(DEFAULT_STDOUT_HEADER)

    return logger

How to use the PyAnsys custom logger

"""Test for PyAnsys logging."""

import io
import logging
from pathlib import Path
import sys
import weakref

import pyansys_logging

def test_default_logger():
    """Create a logger with default options.

    Only stdout logger must be used.
    """
    capture = CaptureStdOut()
    with capture:
        test_logger = pyansys_logging.Logger()
        test_logger.info("Test stdout")

    # The instance name is empty, which leaves two spaces between the dashes.
    assert "INFO -  - test_pyansys_logging - test_default_logger - Test stdout" in capture.content
    # File handlers are not activated.
    assert not (Path.cwd() / "PyProject.log").exists()

def test_level_stdout():
    """Create a logger with the ``INFO`` level and then change it to ``WARNING``.

    Only stdout logger must be used.
    """
    capture = CaptureStdOut()
    with capture:
        test_logger = pyansys_logging.Logger(level=logging.INFO)
        test_logger.debug("Debug stdout with level=INFO")
        test_logger.info("Info stdout with level=INFO")
        test_logger.warning("Warning stdout with level=INFO")
        test_logger.error("Error stdout with level=INFO")
        test_logger.critical("Critical stdout with level=INFO")

        # Modify the level
        test_logger.setLevel(level=logging.WARNING)
        test_logger.debug("Debug stdout with level=WARNING")
        test_logger.info("Info stdout with level=WARNING")
        test_logger.warning("Warning stdout with level=WARNING")
        test_logger.error("Error stdout with level=WARNING")
        test_logger.critical("Critical stdout with level=WARNING")

    # level=INFO
    assert (
        "DEBUG -  - test_pyansys_logging - test_level_stdout - Debug stdout with level=INFO"
        not in capture.content
    )
    assert (
        "INFO -  - test_pyansys_logging - test_level_stdout - Info stdout with level=INFO"
        in capture.content
    )
    assert (
        "WARNING -  - test_pyansys_logging - test_level_stdout - Warning stdout with level=INFO"
        in capture.content
    )
    assert (
        "ERROR -  - test_pyansys_logging - test_level_stdout - Error stdout with level=INFO"
        in capture.content
    )
    assert (
        "CRITICAL -  - test_pyansys_logging - test_level_stdout - Critical stdout with level=INFO"
        in capture.content
    )

    # level=WARNING
    assert (
        "INFO -  - test_pyansys_logging - test_level_stdout - Info stdout with level=WARNING"
        not in capture.content
    )
    assert (
        "WARNING -  - test_pyansys_logging - test_level_stdout - Warning stdout with level=WARNING"
        in capture.content
    )
    assert (
        "ERROR -  - test_pyansys_logging - test_level_stdout - Error stdout with level=WARNING"
        in capture.content
    )
    assert (
        "CRITICAL -  - test_pyansys_logging - test_level_stdout - Critical stdout with level=WARNING"  # noqa: E501
        in capture.content
    )

    # File handlers are not activated.
    assert not (Path.cwd() / "PyProject.log").exists()

def test_file_handlers(tmpdir):
    """Activate a file handler different from `PyProject.log`."""
    # Convert the ``tmpdir`` path to ``pathlib.Path`` so that its methods can be used.
    file_logger = Path(tmpdir.mkdir("sub").join("test_logger.txt"))
    test_logger = pyansys_logging.Logger(to_file=True, filename=file_logger)
    test_logger.info("Test Misc File")

    with file_logger.open("r") as f:
        content = f.readlines()

    assert file_logger.exists()  # The file handler is not the default PyProject.Log
    assert len(content) == 6
    assert "NEW SESSION" in content[2]
    assert (
        "==============================================================================="
        in content[3]
    )
    assert "LEVEL - INSTANCE NAME - MODULE - FUNCTION - MESSAGE" in content[4]
    assert "INFO -  - test_pyansys_logging - test_file_handlers - Test Misc File" in content[5]

    # Delete the logger and its file handler.
    test_logger_ref = weakref.ref(test_logger)
    del test_logger
    assert test_logger_ref() is None

class CaptureStdOut:
    """Capture standard output with a context manager."""

    def __init__(self):
        self._stream = io.StringIO()

    def __enter__(self):
        """Runtime context is entered."""
        sys.stdout = self._stream

    def __exit__(self, type, value, traceback):
        """Runtime context is exited."""
        sys.stdout = sys.__stdout__

    @property
    def content(self):
        """Return the captured content."""
        return self._stream.getvalue()

Global logger#

A global logger named py*_global is created when importing ansys.product.service (ansys.product.service.__init__). This logger does not track instances but rather is used globally. Consequently, its use is recommended for most scenarios, especially those where simple modules or classes are involved.

For example, if you intend to log the initialization of a library or module, import the global logger at the top of your script or module:

from ansys.product.service import LOG

If the default name of the global logger is in conflict with the name of another logger, rename it:

from ansys.product.service import LOG as logger

The default logging level of the global logger is ERROR (logging.ERROR).

You can change the output to a different logging level like this:

LOG.logger.setLevel("DEBUG")

LOG.file_handler.setLevel("DEBUG") # if present

LOG.std_out_handler.setLevel("DEBUG") # if present

Alternatively, to ensure that all handlers are set to the desired log level, use this approach:

LOG.setLevel("DEBUG")

By default, the global logger does not log to a file. However, you can enable logging to both a file and the standard output by adding a file handler:

import os

file_path = os.path.join(os.getcwd(), "pylibrary.log")

LOG.log_to_file(file_path)

If you want to change the characteristics of the global logger from the beginning of the execution, you must edit the __init__ file in the directory of your library.

To log using the global logger, call the desired method as you would with a normal logger:

>>> import logging

>>> from ansys.mapdl.core.logging import Logger

>>> LOG = Logger(level=logging.DEBUG, to_file=False, to_stdout=True)

>>> LOG.debug("This is LOG debug message.")

| Level | Instance | Module | Function | Message

|----------|------------|--------------|-------------|---------------------------

| DEBUG | | __init__ | <module> | This is LOG debug message.

Instance logger#

An instance logger is created every time that the _MapdlCore class is instantiated. Using this instance logger is recommended when using the pool library or when using multiple instances of MAPDL. The main feature of the instance logger is that it tracks each instance and includes the instance name when logging. The names of instances are unique. For example, when using the MAPDL gRPC version, the instance name includes the IP and port of the corresponding instance, making the logger unique.

You can access instance loggers in two places:

_MapdlCore._log for backward compatibility

LOG._instances, which is a field of the dict data type with a key that is the name of the created logger

These instance loggers inherit from the pymapdl_global output handlers and logging level unless otherwise specified. An instance logger works similarly to the global logger. If you want to add a file handler, use the log_to_file method. If you want to change the log level, use the logging.Logger.setLevel() method.

This code snippet shows how to use an instance logger:

>>> from ansys.mapdl.core import launch_mapdl

>>> mapdl = launch_mapdl()

>>> mapdl._log.info("This is an useful message")

| Level | Instance | Module | Function | Message

|----------|-----------------|----------|-------------|--------------------------

| INFO | 127.0.0.1:50052 | test | <module> | This is an useful message
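How an instance logger attaches the instance name to each record can be sketched with the standard logging.LoggerAdapter class. The `FakeInstance` and `InstanceAdapter` classes and the logger name below are hypothetical stand-ins, not PyMAPDL code. The sketch assumes only that the product instance exposes a `get_name()` method.

```python
import io
import logging


class FakeInstance:
    """Hypothetical stand-in for a product instance."""

    def __init__(self, ip, port):
        self._name = f"{ip}:{port}"

    def get_name(self):
        return self._name


class InstanceAdapter(logging.LoggerAdapter):
    def process(self, msg, kwargs):
        # Look up the name at call time so that later renames are reflected.
        kwargs["extra"] = {"instance_name": self.extra.get_name()}
        return msg, kwargs


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(
    logging.Formatter("%(levelname)s - %(instance_name)s - %(message)s")
)

base = logging.getLogger("sketch.instances")
base.setLevel(logging.INFO)
base.propagate = False
base.addHandler(handler)

instance_log = InstanceAdapter(base, FakeInstance("127.0.0.1", 50052))
instance_log.info("Solver connected.")
line = stream.getvalue()
```

The adapter is created once per instance, so the calling code logs as usual and the instance name is added automatically.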

Ansys product loggers#

An Ansys product, due to its architecture, can have several loggers. The logging library supports working with a finite number of loggers. The logging.getLogger() factory function helps to access each logger by its name. In addition to name mappings, a hierarchy can be established to structure the loggers’ parenting and their connections.
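The name mapping and hierarchy described above can be illustrated with a few lines of standard-library code. The logger names `sketch_product` and `sketch_product.solver` are made up for this sketch.

```python
import io
import logging

stream = io.StringIO()

# The parent logger owns the only handler.
parent = logging.getLogger("sketch_product")
parent.setLevel(logging.INFO)
parent.propagate = False
parent.addHandler(logging.StreamHandler(stream))

# A dotted name places the new logger below ``sketch_product`` in the hierarchy.
child = logging.getLogger("sketch_product.solver")

# getLogger() returns the same object every time it is called with the same name.
same_object = logging.getLogger("sketch_product.solver") is child
child_parent_is_parent = child.parent is parent

# The child has no level of its own, so it inherits the parent's level.
effective = child.getEffectiveLevel()

# The record propagates up to the parent's handler.
child.info("Handled by the parent's handler.")
output = stream.getvalue()
```

Because the child propagates its records, configuring handlers and levels on the parent is enough to control every logger below it.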

For example, if an Ansys product is using a pre-existing custom logger encapsulated inside the product itself, the PyAnsys library benefits from exposing it through the standard Python tools. You should use the standard library as much as possible. It facilitates every contribution to the PyAnsys library, both external and internal, by exposing common tools that are widely adopted. Each developer is able to operate quickly and autonomously. The project takes advantage of the entire set of features exposed in the standard logger and all the upcoming improvements.

Custom log handlers#

You might need to catch Ansys product messages and redirect them to another logger. For example, Ansys Electronics Desktop (AEDT) has its own internal logger called the message manager, which has three main destinations:

Global, which is for the entire project manager

Project, which is related to the project

Design, which is related to the design, making it the most specific destination of the three loggers

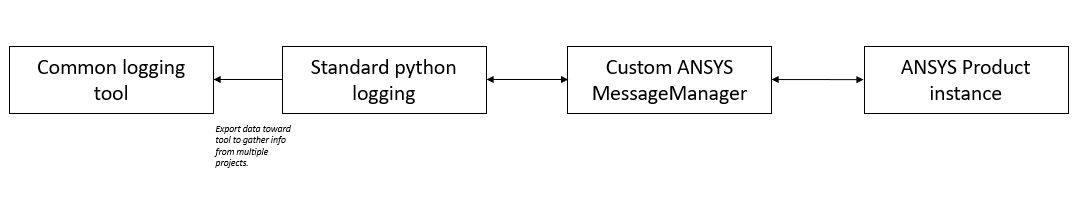

The message manager does not use the standard Python logging module, which can be a problem when exporting messages and data from it to a common tool. In most cases, it is easier to work with the standard Python module to extract data. To overcome this AEDT limitation, you must wrap the existing message manager into a logger based on the standard logging library.

The wrapper implementation is essentially a custom handler based on a class inherited from the logging.Handler class. The initializer of this class requires the message manager to be passed as an argument to link the standard logging service with the AEDT message manager:

class LogHandler(logging.Handler):
    def __init__(self, internal_app_messenger, log_destination, level=logging.INFO):
        logging.Handler.__init__(self, level)
        # The destination is used when the internal message manager
        # is made of several different logs. Otherwise, it is not relevant.
        self.destination = log_destination
        self.messenger = internal_app_messenger

    def emit(self, record):
        pass

The purpose of this custom logging.Handler class is to send log messages to the AEDT logging stream. One mandatory action is to override the emit() method. This method operates as a proxy, dispatching all log messages to the message manager. Based on the record level, the message is sent to the matching log level of the message manager, such as INFO, ERROR, or DEBUG, to fit the levels provided by the Ansys product. As a reminder, the record is an object containing all kinds of information related to the logged event.
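A possible shape for such an emit() method is sketched below. The real AEDT message manager API is not shown in this guide, so the `FakeMessenger` class, its `add_message()` method, and the three severity names are assumptions made purely for illustration.

```python
import logging


class FakeMessenger:
    """Hypothetical stand-in for a product message manager."""

    def __init__(self):
        self.received = []

    def add_message(self, severity, text, destination):
        self.received.append((severity, text, destination))


class ProxyHandler(logging.Handler):
    def __init__(self, messenger, destination, level=logging.INFO):
        super().__init__(level)
        self.messenger = messenger
        self.destination = destination

    def emit(self, record):
        try:
            # Map the Python level onto the (assumed) product severities.
            if record.levelno >= logging.ERROR:
                severity = "error"
            elif record.levelno >= logging.WARNING:
                severity = "warning"
            else:
                severity = "info"
            self.messenger.add_message(severity, self.format(record), self.destination)
        except Exception:
            # Report the failure through logging instead of raising.
            self.handleError(record)


messenger = FakeMessenger()
proxy_log = logging.getLogger("sketch.proxy")
proxy_log.setLevel(logging.DEBUG)
proxy_log.propagate = False
proxy_log.addHandler(ProxyHandler(messenger, "Design"))

proxy_log.debug("Dropped by the handler level.")
proxy_log.info("Mesh refined.")
proxy_log.error("Solver diverged.")
```

Wrapping the dispatch in a try block and calling handleError() follows the convention of the built-in handlers, so a failure in the message manager does not interrupt the app.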

This custom handler is used in the new logger instance (the one based on the logging library). To avoid any conflict or message duplication, before adding a handler to any logger, verify whether an appropriate handler is already available.
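One way to perform this check is sketched here. The `ensure_handler()` helper and the logger name are hypothetical. The sketch reuses a handler of the requested type when one is already attached and adds a new one otherwise.

```python
import logging


def ensure_handler(logger, handler_type, factory):
    """Return the existing handler of ``handler_type`` or add a new one."""
    for existing in logger.handlers:
        # Exact type match, so a FileHandler does not count as a StreamHandler.
        if type(existing) is handler_type:
            return existing
    handler = factory()
    logger.addHandler(handler)
    return handler


dedup_log = logging.getLogger("sketch.dedup")
first = ensure_handler(dedup_log, logging.NullHandler, logging.NullHandler)
second = ensure_handler(dedup_log, logging.NullHandler, logging.NullHandler)
handler_count = len(dedup_log.handlers)
```

The second call returns the handler added by the first call, so each message is emitted only once.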